PERTURBO capabilities

Electronic interactions

- Electron-phonon (e-ph) matrix elements in the Bloch and Wannier bases

- Long-range electron-phonon interactions: dipole / Fröhlich (polar materials) and quadrupole (both polar and nonpolar materials)

- E-ph interactions in the presence of spin-orbit coupling and spin-collinear magnetism

- E-ph interactions within DFT+U for correlated materials

- E-ph interactions using anharmonic phonons (from TDEP or SSCHA)

- Electron-defect interactions, for both neutral and charged defects / impurities

Transport

- Electron relaxation times resolved by electronic state (and if desired, by phonon mode)

- Electron mean free paths

- Conductivity, resistivity, and mobility as a function of temperature and chemical potential (phonon-limited, defect-limited, or both mechanisms)

- Seebeck coefficient

- Transport in high electric fields and velocity-field curves

- Magnetotransport: magnetoresistance, Hall coefficient, and Hall mobility (no Berry curvature corrections)

- Spin relaxation times as a function of temperature (from e-ph interactions, within Elliott-Yafet theory)

- Cumulant method for large polarons: calculation of electronic spectral functions

Nonequilibrium / ultrafast dynamics

- Ultrafast electron and hole dynamics with fixed phonon occupations (real-time Boltzmann equation formalism)

- Simulation of ultrafast spectroscopies and pump pulses

Software features and implementation

- YAML and HDF5 output formats

- MPI plus OpenMP parallelization

- GPU acceleration, for both transport and ultrafast dynamics calculations

- Compatibility with Quantum ESPRESSO v7.3

- Compatibility with VASP and other DFT codes (if the input data is provided in the correct format)

- Both norm-conserving and ultrasoft pseudopotentials (no PAWs for now)

Implementation details

PERTURBO is written in modern Fortran 95 with hybrid parallelization (MPI, OpenMP, and OpenACC). The main output formats are HDF5 and YAML, which are portable from one machine to another and convenient for postprocessing with high-level languages (e.g., Python). PERTURBO consists of a core program, perturbo.x, for the electron transport calculations and an interface program, qe2pert.x, which reads the output files of Quantum ESPRESSO (QE, versions 6.4, 6.5, 7.0, 7.2, 7.3) and Wannier90 (W90, version >= 3.0.0). The qe2pert.x interface generates an HDF5 file, which is then read by the core perturbo.x program. In principle, any other density functional theory (DFT) code (e.g., VASP) can be used with PERTURBO, as long as an interface for that code can prepare the HDF5 file (called prefix_epr.h5) that PERTURBO reads.

Code Performance and Scaling / Parallelization

This section discusses the scaling performance of the publicly available version of PERTURBO. Since its inception, PERTURBO has implemented a hybrid MPI / OpenMP parallelization that allows for outstanding scaling on high-performance computing (HPC) platforms. Starting with PERTURBO v3.0, GPU acceleration is supported for both transport and ultrafast dynamics calculations.

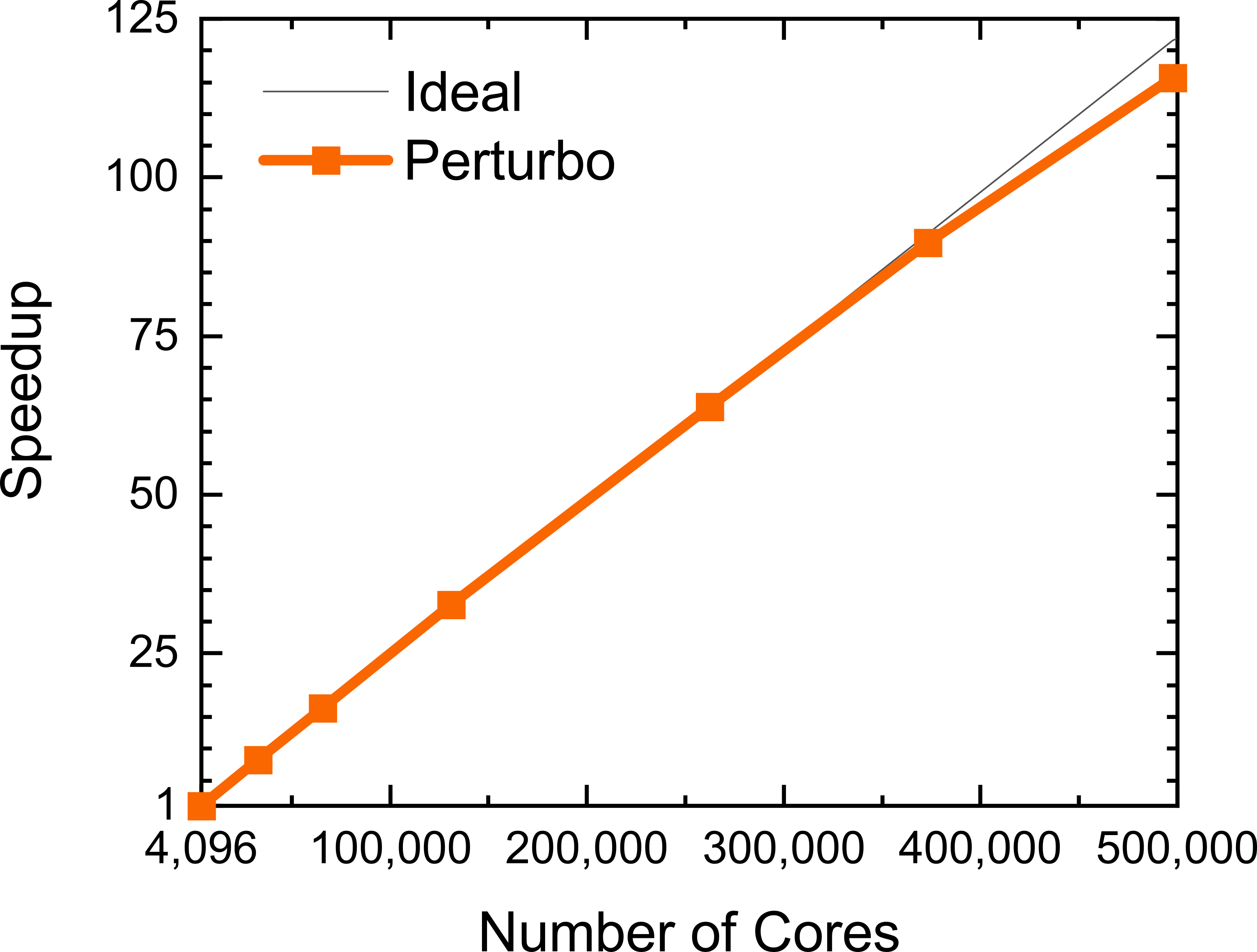

Scaling performance on CPUs

To showcase the scaling performance, we present a calculation of the imaginary part of the electron-phonon self-energy (calculation mode imsigma) in silicon using 72x72x72 electron k- and phonon q-point grids. The scaling test was performed on Intel Xeon Phi 7250 processors at the National Energy Research Scientific Computing Center (NERSC). As seen from the figure, PERTURBO shows almost linear scaling up to 500,000 cores (the deviation from linear scaling at 500,000 cores is less than 5%).

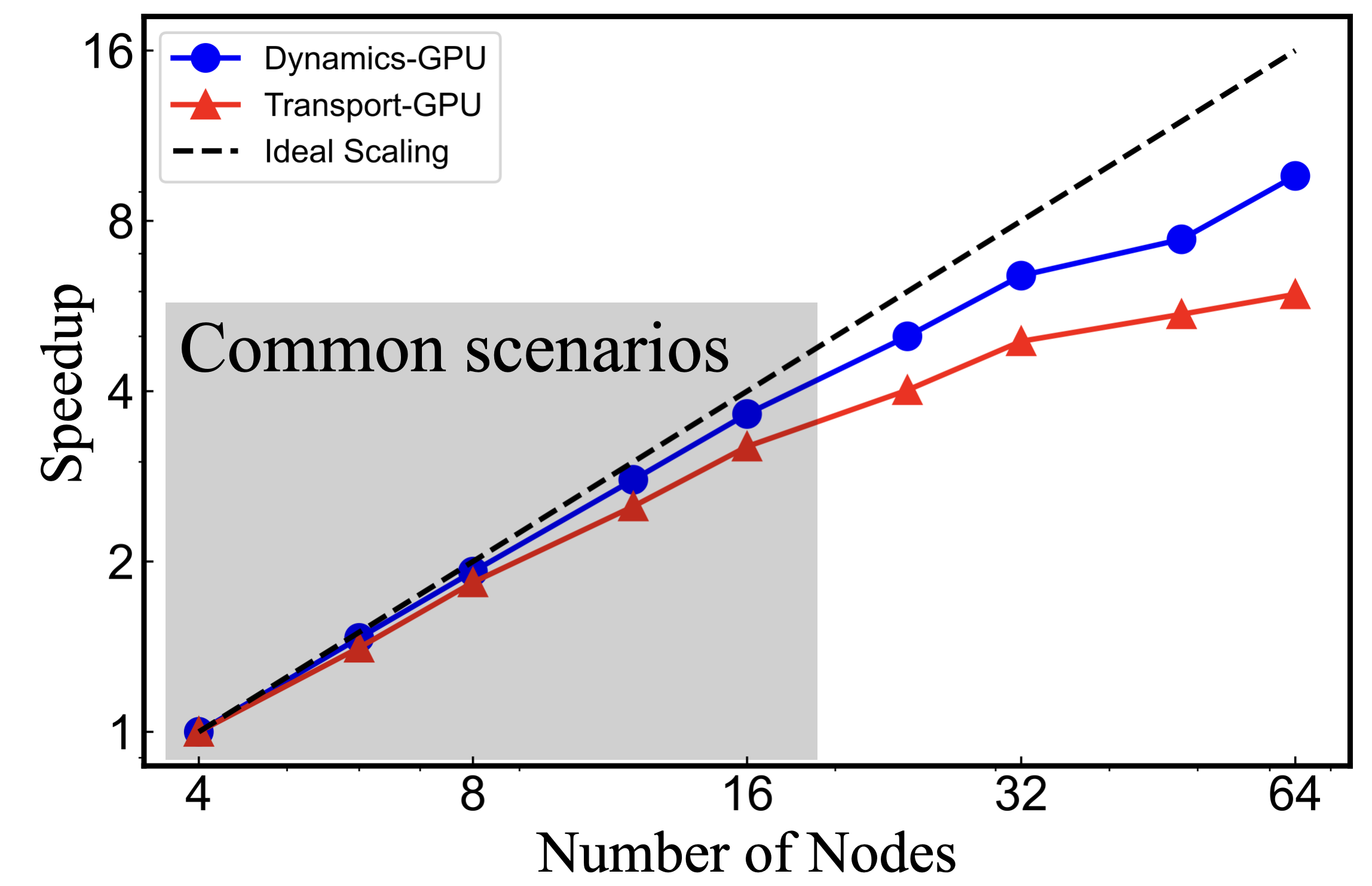
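The "deviation from linear scaling" quoted above can be made concrete with the usual strong-scaling definitions: speedup is the baseline run time divided by the run time on more cores, and parallel efficiency is the speedup divided by the factor by which the core count grew. The following is a minimal Python sketch of that arithmetic. The function name `strong_scaling` and the timings are hypothetical illustrations, not measured PERTURBO data and not part of the PERTURBO code.

```python
def strong_scaling(cores, times):
    """Return (speedup, efficiency) lists relative to the first (smallest) run.

    cores: core counts, in increasing order
    times: wall-clock times for the same fixed-size problem
    """
    base_cores, base_time = cores[0], times[0]
    # Speedup: how much faster each run is than the baseline run.
    speedup = [base_time / t for t in times]
    # Efficiency: speedup divided by the increase in core count (1.0 = ideal).
    efficiency = [s * base_cores / c for s, c in zip(speedup, cores)]
    return speedup, efficiency


# Hypothetical timings in seconds (assumption for illustration only).
cores = [1000, 2000, 4000, 8000]
times = [800.0, 400.0, 200.0, 104.0]

speedup, efficiency = strong_scaling(cores, times)
for c, s, e in zip(cores, speedup, efficiency):
    # Deviation from linear scaling is simply 1 - efficiency.
    print(f"{c:>6d} cores  speedup {s:6.2f}  efficiency {e:6.1%}  deviation {1 - e:5.1%}")
```

With these made-up numbers, the last run is slightly slower than ideal, so its efficiency falls just below 100%. A "less than 5% deviation" statement corresponds to an efficiency above 0.95 at the largest core count.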

Scaling performance on GPUs

For the GPU scaling test, we present calculations of transport ITA and ultrafast dynamics in GaAs using 195x195x195 electron k- and phonon q-point grids. The scaling test was performed on the heterogeneous GPU nodes of the Perlmutter cluster at NERSC, with each node consisting of one CPU (AMD EPYC 7763) with 64 cores and four GPUs (NVIDIA A100 with 40 GB). As seen from the figure, PERTURBO exhibits near-ideal scaling up to 20 nodes (80 GPUs) for dynamics, with a slight decrease for transport. These results show that PERTURBO is prepared for future HPC architectures and for exascale computing.

Research Team

The PERTURBO code is developed in Prof. Marco Bernardi’s research group at Caltech. For more information, we invite you to visit the group website.